Wolf & Crow Pipeline

Tools

A pipeline in record time

Wolf & Crow in Santa Monica decided to push further into the world of feature films by taking on a couple of fully animated sequences for the 2015 fantasy film “Pan,” and they needed a CG production pipeline spun up quickly so their artists could collaborate with minimal fuss. I was brought in to build it as fast as possible, and later dove into the production itself to help finish some FX shots before the final deadline.

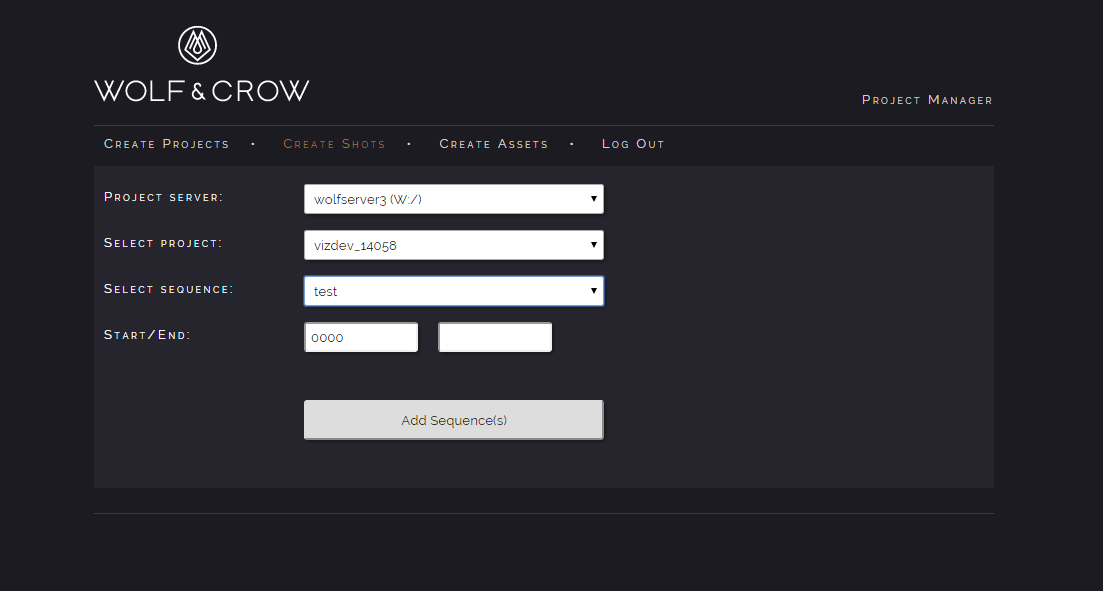

After putting together a quick back-end system of command-line tools for defining and organizing project structures on disk and creating new shots and sequences, I got to work on the artist-facing tools. A web-based frontend built with jQuery and PHP let producers run project-level commands without having to touch the command line.
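As a rough illustration of the kind of back-end command described above, here is a minimal Python sketch of a shot-creation tool. The folder layout (`sequences/<sequence>/<shot>/<dept>`), the department names in `SHOT_SUBDIRS`, the example `newshot` command, and the `create_shot` and `main` functions are all hypothetical; the actual project structure isn't documented here.

```python
import argparse
from pathlib import Path

# Hypothetical department folders created for every shot.
SHOT_SUBDIRS = ("anim", "light", "fx", "comp", "publish")


def create_shot(project_root, sequence, shot):
    """Create <project_root>/sequences/<sequence>/<shot>/<dept> and return the shot folder."""
    shot_dir = Path(project_root) / "sequences" / sequence / shot
    for sub in SHOT_SUBDIRS:
        # parents=True creates missing sequence/shot levels; exist_ok=True makes re-runs harmless.
        (shot_dir / sub).mkdir(parents=True, exist_ok=True)
    return shot_dir


def main(argv=None):
    """Command-line entry point, e.g. `newshot /mnt/projects/pan sq010 sh0040` (hypothetical)."""
    parser = argparse.ArgumentParser(description="Create a new shot on disk.")
    parser.add_argument("project_root")
    parser.add_argument("sequence")
    parser.add_argument("shot")
    args = parser.parse_args(argv)
    print(create_shot(args.project_root, args.sequence, args.shot))
    return 0
```

Because `main` accepts an argument list, it can be wired to a console script or an `if __name__ == "__main__":` guard, and a web frontend like the PHP one described above could shell out to the same command rather than reimplementing the logic.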

Tracking assets and changes

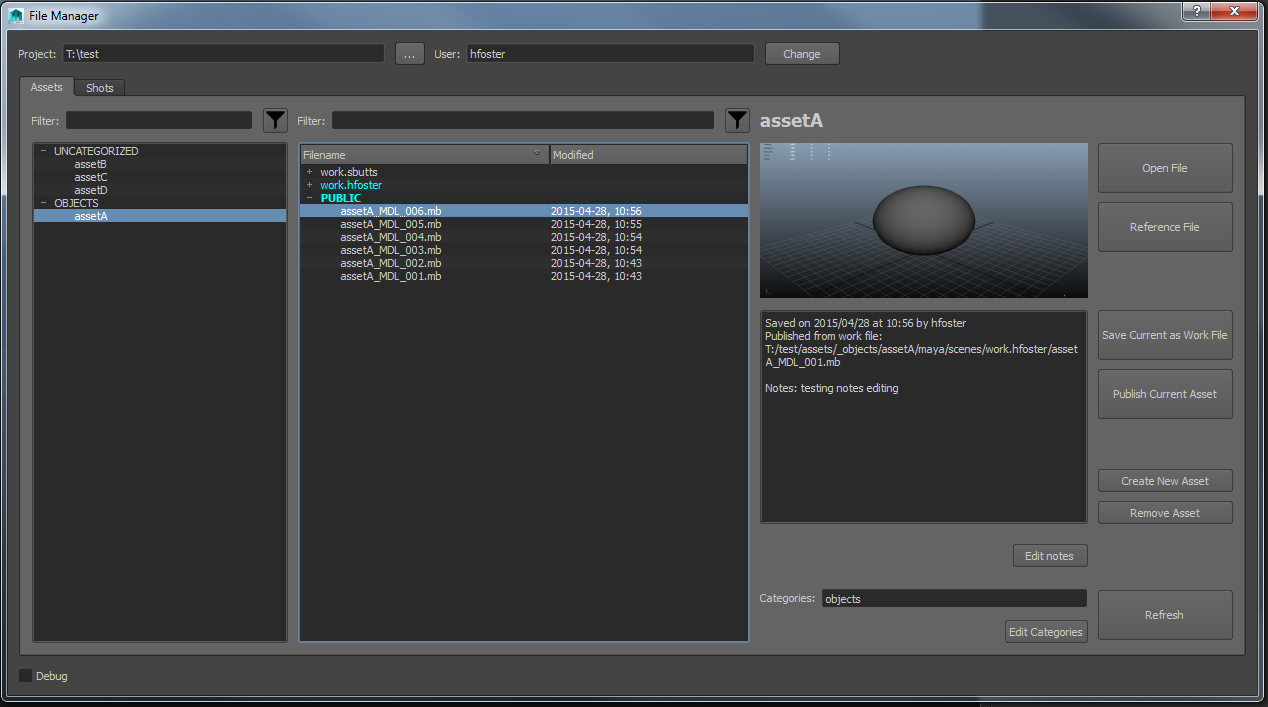

For the Maya artists on the team, several tools were built to facilitate asset sharing and modular scene construction. A “File Manager” let artists create assets and later “publish” them for other users to reference in their own scenes. A dynamic tagging system controlled how assets were organized in the File Manager without touching the files themselves, so assets could be recategorized as the project evolved while their paths on disk stayed the same. Each asset’s full publish history was saved and displayed, so artists and producers could track how assets changed over time.
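One way to model the tagging and publish-history ideas is sketched below in Python. It assumes tags live in metadata (here, a plain `set` on a hypothetical `Asset` record) rather than in the folder structure, and that each publish appends a numbered `Publish` entry instead of overwriting the previous one. The class, field, and function names are illustrative, not taken from the actual File Manager.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Set


@dataclass
class Publish:
    version: int
    author: str
    comment: str
    published_at: datetime


@dataclass
class Asset:
    name: str
    path: str  # location on disk; retagging never changes it
    tags: Set[str] = field(default_factory=set)
    history: List[Publish] = field(default_factory=list)

    def publish(self, author: str, comment: str) -> Publish:
        """Record a new version; earlier publishes stay in the history."""
        entry = Publish(len(self.history) + 1, author, comment, datetime.now(timezone.utc))
        self.history.append(entry)
        return entry

    @property
    def latest(self) -> Optional[Publish]:
        """Most recent publish, or None if the asset was never published."""
        return self.history[-1] if self.history else None


def assets_with_tag(assets, tag):
    """Return the assets a File Manager view would list under `tag`, sorted by name."""
    return sorted((a for a in assets if tag in a.tags), key=lambda a: a.name)
```

Retagging an asset only edits `asset.tags`; `asset.path` is untouched, which is what lets assets move between categories without affecting the files other artists reference.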

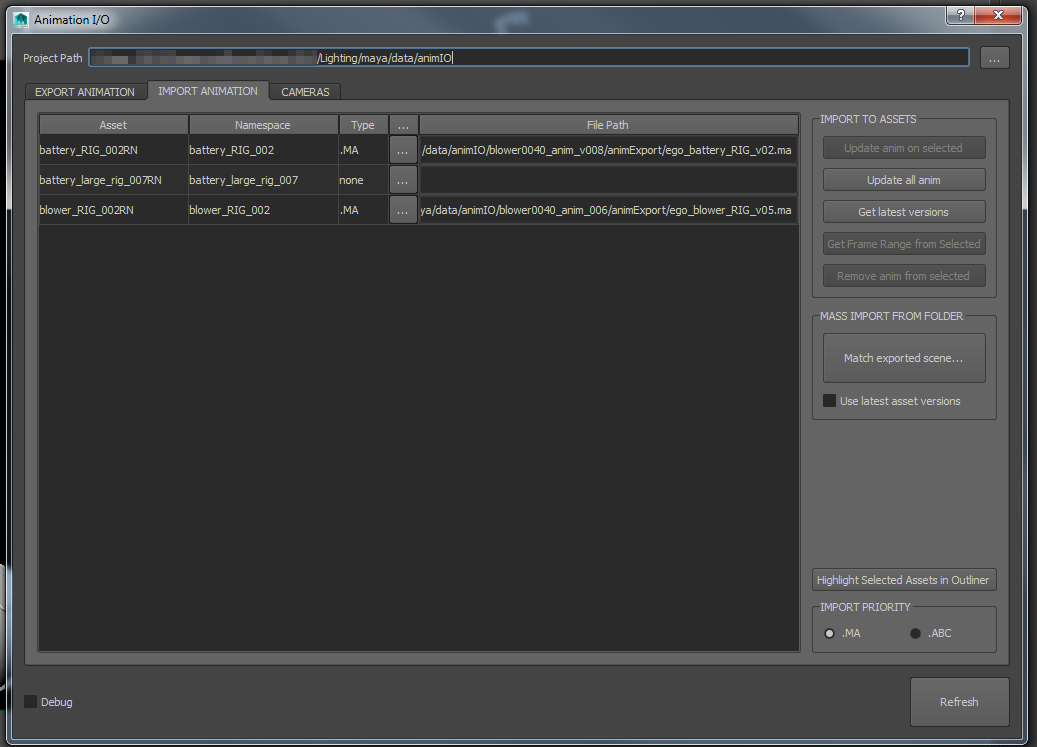

Breaking up complex scenes into shareable data

I built other tools to split animation data and shading data into their own publishable objects, so animators and lighters could work simultaneously without interrupting each other. Once an animator published animation data, a lighter could match their scene to exactly what the animator exported, without needing to know what had changed and without any of the extraneous clutter that builds up in an animator’s scene during quick iteration! The “Look Manager” tool saved shading assignments, displacement sets, layer overrides, and similar information to external files that could be published and shared, making it easy to update materials across multiple shots in a sequence.
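The sketch below shows one possible file format for a published look, assuming a hypothetical JSON layout that maps material names to mesh names, plus optional displacement sets and per-layer overrides. The Maya side (querying and applying shading groups) is deliberately left out, so `save_look`, `load_look`, and `resolve_assignments` are illustrative helpers rather than the tool's real interface.

```python
import json
from pathlib import Path


def save_look(path, assignments, displacement_sets=None, overrides=None):
    """Write a look description to a JSON file that can be published and shared."""
    data = {
        "assignments": assignments,                   # material name -> list of mesh names
        "displacement_sets": displacement_sets or {},  # set name -> list of mesh names
        "overrides": overrides or {},                 # e.g. render layer -> {attribute: value}
    }
    Path(path).write_text(json.dumps(data, indent=2, sort_keys=True))


def load_look(path):
    """Read a previously published look file back into a dictionary."""
    return json.loads(Path(path).read_text())


def resolve_assignments(look, scene_meshes):
    """Map each material to the meshes that actually exist in this shot's scene."""
    present = set(scene_meshes)
    resolved = {}
    for material, meshes in look["assignments"].items():
        hits = [m for m in meshes if m in present]
        if hits:
            resolved[material] = hits
    return resolved
```

`resolve_assignments` skips meshes a given shot doesn't contain, which is one simple way a single published look could be reapplied across several shots in a sequence.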

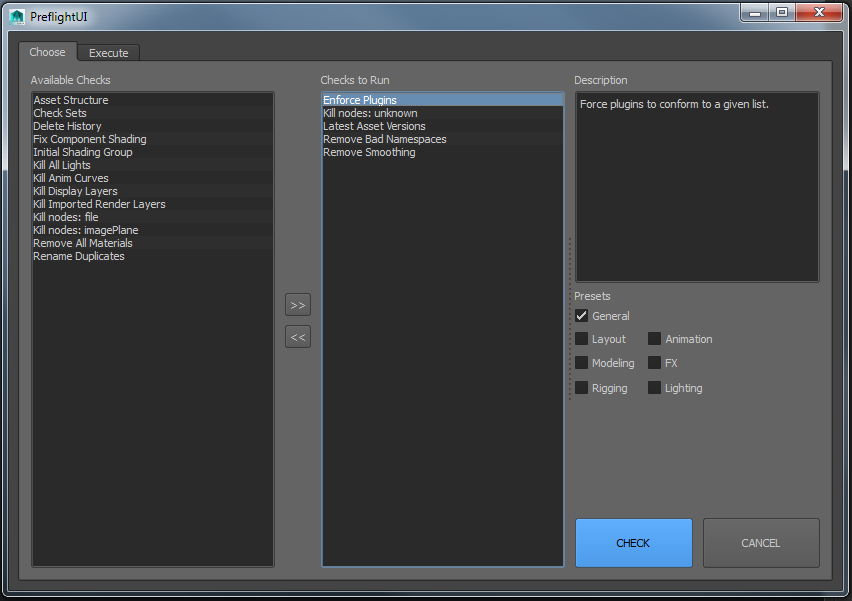

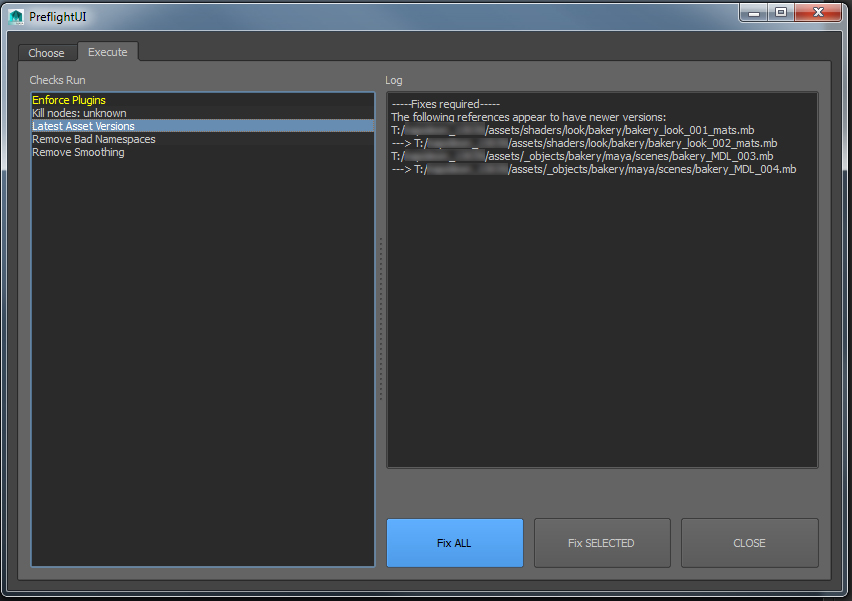

Sanity checks

Another tool, the Preflight UI, ensured that all published data met pipeline standards, keeping production smooth and predictable. A directory of “sanity check” scripts was loaded dynamically based on the type of data being published, and each check reported warnings or errors if the data didn’t pass muster. Any TD could add checks to this directory and assign them to specific publish types, so the checks run for each kind of publish could be easily customized.
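The dynamic loading step could look something like the Python sketch below, which uses `importlib` to import every script in a checks directory. It assumes a hypothetical convention where each check module declares a `PUBLISH_TYPES` tuple and a `run(data)` function returning `(level, message)` pairs; the real Preflight UI's conventions aren't described here, and `load_checks` and `run_preflight` are illustrative names.

```python
import importlib.util
from pathlib import Path


def load_checks(check_dir, publish_type):
    """Import every *.py file in check_dir whose PUBLISH_TYPES includes publish_type."""
    checks = []
    for path in sorted(Path(check_dir).glob("*.py")):
        spec = importlib.util.spec_from_file_location(f"preflight_{path.stem}", path)
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)  # runs the check script's top-level code
        if publish_type in getattr(module, "PUBLISH_TYPES", ()):
            checks.append(module)
    return checks


def run_preflight(checks, data):
    """Run each check and collect (level, check name, message); any "error" blocks the publish."""
    results = []
    for check in checks:
        for level, message in check.run(data):
            results.append((level, check.__name__, message))
    can_publish = not any(level == "error" for level, _, _ in results)
    return can_publish, results
```

Under this convention, adding a check is just dropping a new file into the directory; errors block the publish while warnings are only reported.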

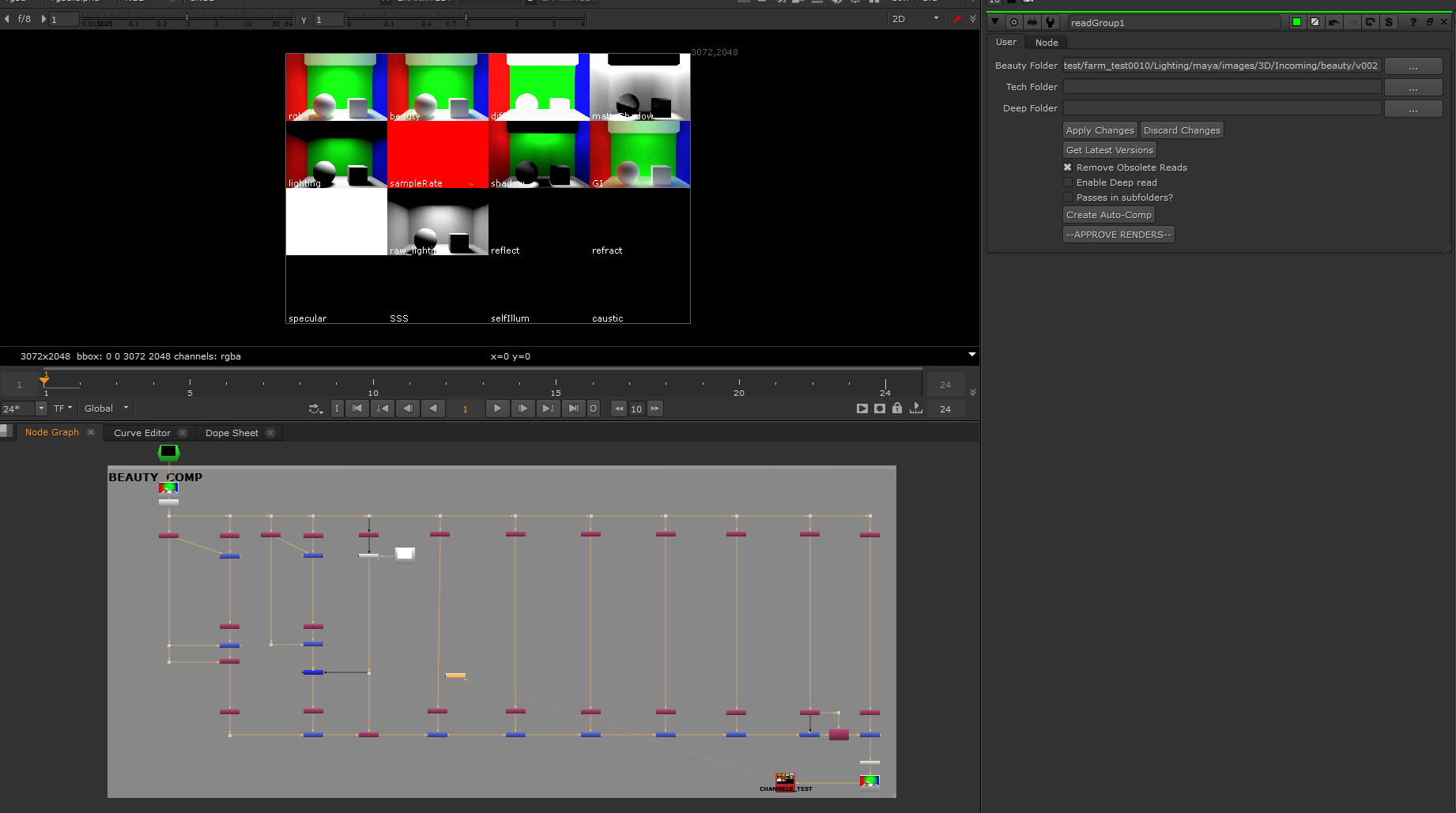

The last part of the process involved checking and publishing renders. To assist with this check, I built an Auto-Compositor tool for Nuke to quickly split up the render passes and generate a contact sheet from them. Once the lighter verified that the passes were constructed correctly, they could publish the renders directly from the Auto-Compositor group node, or even use it as an easy starting point for their own shot composites.
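The Nuke-specific parts of the Auto-Compositor can't run outside Nuke, but its bookkeeping can be sketched in plain Python. The helpers below are hypothetical: `layers_from_channels` groups channel names of the form `layer.channel` (the naming Nuke uses, for example in the list returned by a node's `channels()` method) into render passes, and `contact_sheet_grid` picks a near-square grid for a contact sheet.

```python
import math


def layers_from_channels(channels):
    """Group 'layer.channel' names into an ordered {layer: [channel, ...]} mapping."""
    layers = {}
    for name in channels:
        layer, _, chan = name.partition(".")
        layers.setdefault(layer, []).append(chan)  # dicts keep first-seen layer order
    return layers


def contact_sheet_grid(count):
    """Pick a near-square (columns, rows) grid that fits `count` tiles."""
    if count <= 0:
        return (0, 0)
    columns = math.ceil(math.sqrt(count))
    rows = math.ceil(count / columns)
    return (columns, rows)
```

Inside Nuke, each layer would typically feed its own Shuffle node and the grid dimensions would size a ContactSheet node; that node wiring is left out here.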

Modular tools make the best pipelines

All of the tools were built to be as modular as possible while sharing a common language (the environment variables and workspace), so one tool’s problems wouldn’t break the rest of the pipeline. With few exceptions, the tools could even be used piecemeal outside the pipeline; an included settings.py file let users customize locations and variables so the tools could work just about anywhere.
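A `settings.py` in this spirit might look like the minimal sketch below: defaults live in one dictionary, and an environment variable can override any of them. The setting names, default paths, the `WC_` prefix, and `get_setting` are assumptions for illustration only.

```python
import os

# Hypothetical defaults a studio would edit when installing the tools elsewhere.
DEFAULTS = {
    "PROJECT_ROOT": "/mnt/projects",
    "PUBLISH_DIR": "publish",
    "CHECKS_DIR": "preflight_checks",
}

ENV_PREFIX = "WC_"  # hypothetical prefix for environment-variable overrides


def get_setting(name, environ=None):
    """Return the WC_<NAME> environment override if set, else the default from this file."""
    environ = os.environ if environ is None else environ
    if name not in DEFAULTS:
        raise KeyError(f"unknown setting: {name}")
    return environ.get(ENV_PREFIX + name, DEFAULTS[name])
```

Passing `environ` explicitly keeps the lookup easy to test, while tools in production would simply fall back to `os.environ`.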

A more detailed breakdown of the pipeline is available on my blog.